Which Attribute to Choose? (Part1)

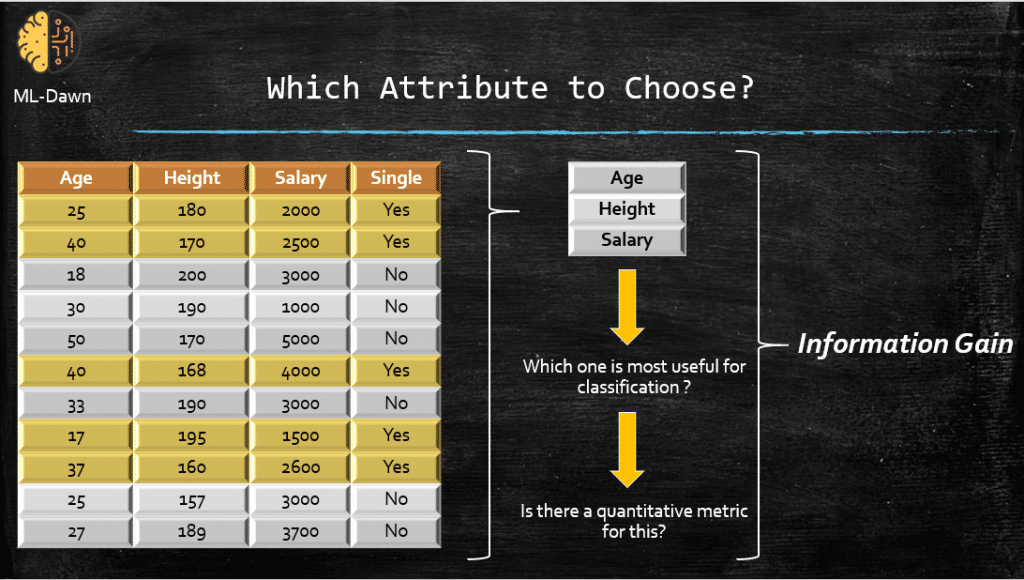

In our last post, we introduced the idea of the decision trees (DTs) and you understood the big picture. Now it is time to get into some of the details. For example, how does a DT choose the best attribute from the dataset? There must be a way for the DT to compare the worth of each attribute and figure out which attribute can help us get to more pure sub-tables (i.e., more certainty). There is indeed a famous quantitative measure for this called Information Gain. But in order to understand it, we have to first learn about the concept of Entropy. As a reminder here is our training set:

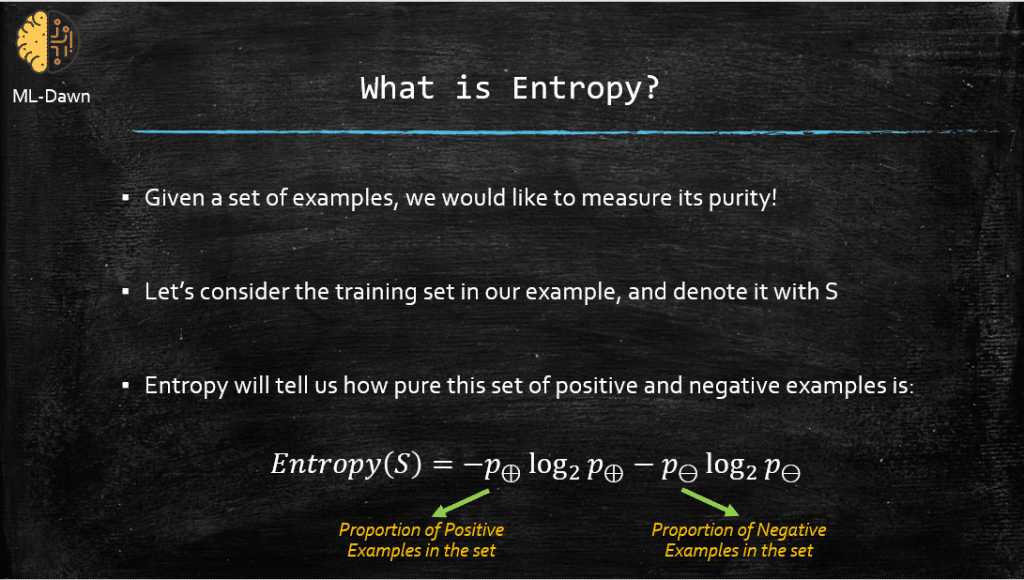

What is Entropy?

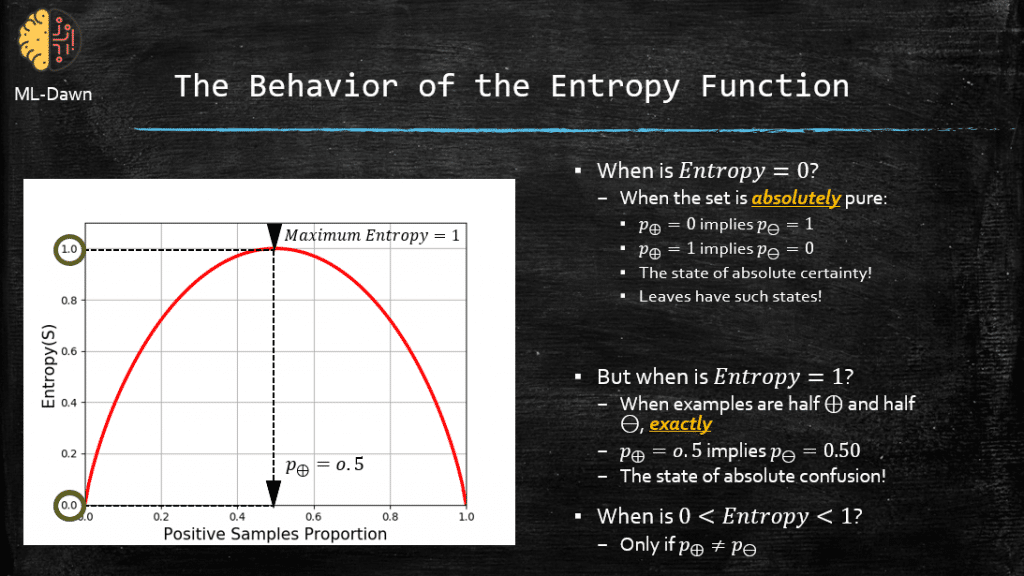

On the horizontal axis we can see the proportion of positive examples (i.e., 1 – Proportion of negative examples) in the set S. On the vertical axis we can see the Entropy of the set S. It seems that this function has indeed a maximum, and it hits 0 ONLY in 2 cases.

In a binary classification problem, when Entropy hits 0 it means we have NO entropy and S is a pure set. So, the members of S are either ALL positive or ALL negative. This is where we have absolute certainty and if you remember in our last post, the leaves of a decision tree are in such state of purity and certainty.

The entropy function for a binary classification has the maximum value of 1. This is the state of utter confusion and highest disorder and entropy. This happens when our set S is EQUALLY divided into positive and negative examples. Meaning that P(+) = 0.5 which automatically implies that P(-) = 0.5.

Finally, in a binary classification problem, if P(+) and P(-) are NOT equal, for example P(+) = 0.7 and P(-) = 0.3, the Entropy is ALWAYS between 0 and 1.

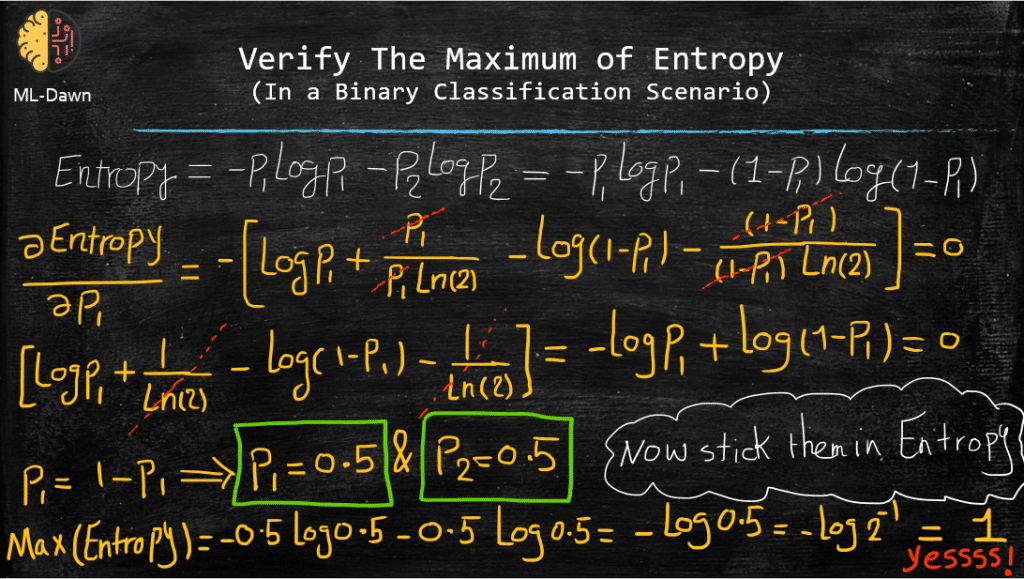

I can actually prove to you that the Entropy for a binary classification problem has a maximum of 1 If and Only If p(+) = P(-) = 0.5. In order to do this, we will have to take the partial derivative of Entropy with respect to one of the proportions (say P(+)), and set it to 0. Then, when we find the critical value for P(+) we can easily find P(-) as we know that: P(+) = 1 – P(-). Finally, by plugging in these critical values for P(+) and P(-) in the Entropy function, we can find the maximum value of the Entropy in the binary classification problem. Below, you can see all the math involved:

This is really cool! Now, we have mathematically proven that ONLY if we have equal proportions between positive and negative example, will the Entropy be maximized and we have determined that maximum value to be exactly 1.

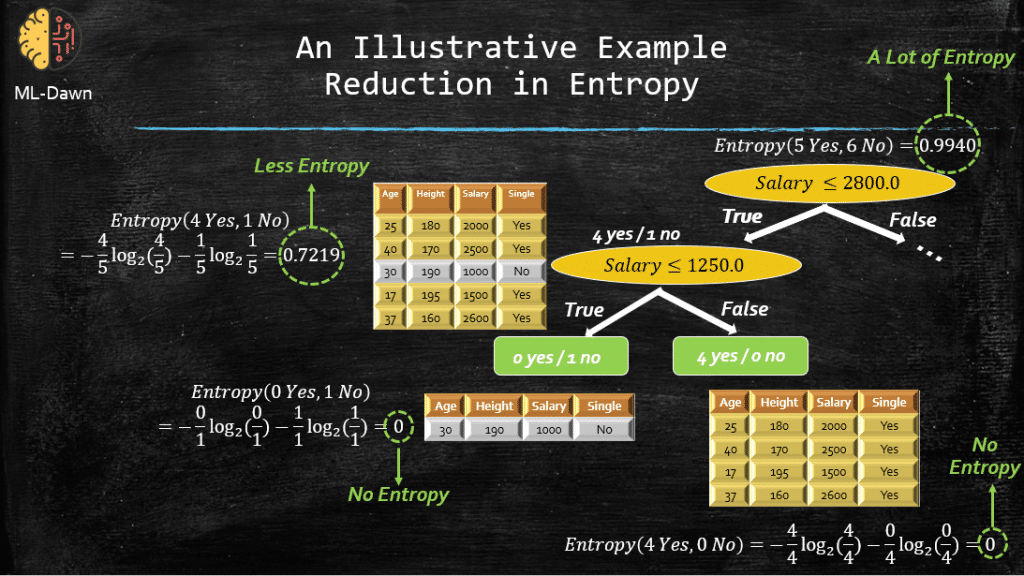

An Illustrative Example

Now, we understand what entropy is as we can apply it to our own training set. Let’s see if we can compute the entropy of our training set. But before we do, what do we expect to get? Should the entropy be close to 1 or close to 0? (hint: compare the 2 proportions)

So this is the starting point where the decision tree (DT) is going to start the training process. Now, in our last post, I showed you how the DT is trained but I never mentioned the concept of Entropy! Now that you know what Entropy is, let’s incorporate that and see whether the DT is trying to increase or decrease the Entropy during the training process. Let’s just focus on the left side of our trained DT and see how the Entropy changes:

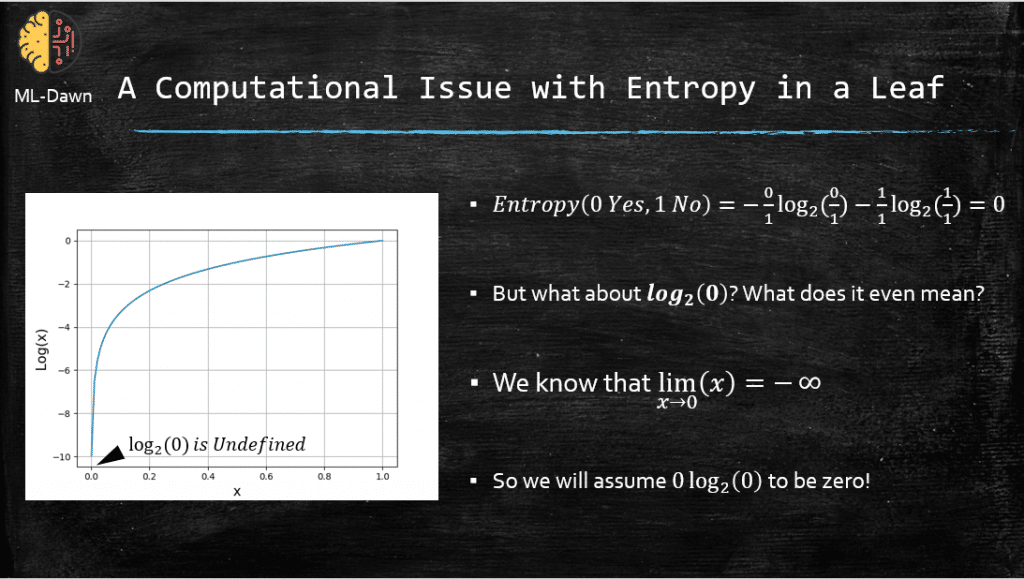

An Issue with Computing Entropy

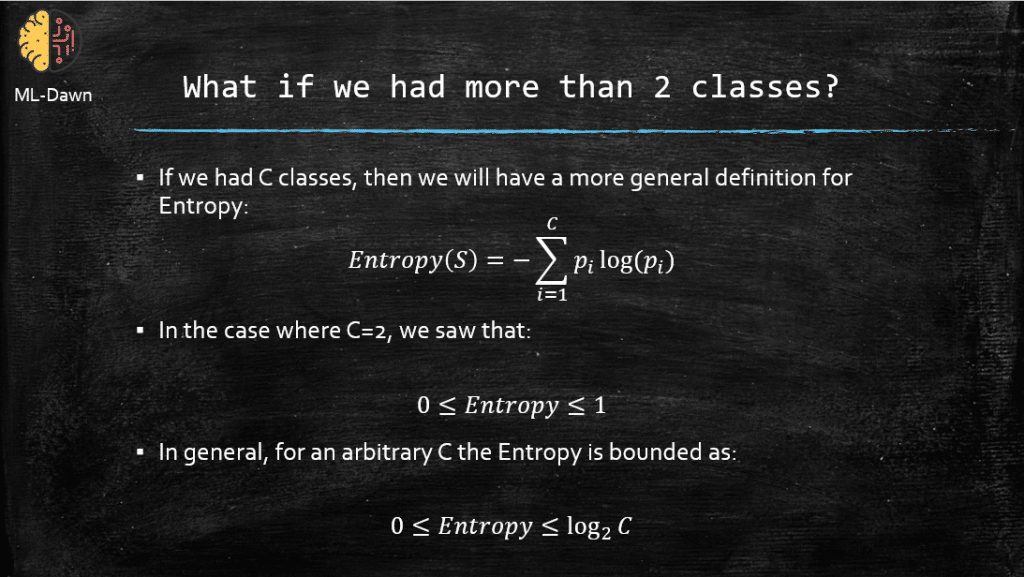

What if we had More than 2 Classes?

So far we have been simplifying things and just considered the 2 class case where we have only 2 class labels: Positive and Negative. Let’s get real now! If we have C number of classes, then the formula for the Entropy will look different alittle bit. Moreover, the Entropy can get higher than 1 now! More precisely, the the maximum of Entropy will be log(C)! As a result, if C=4, then maximum Entropy that we could ever observe will be log(4) which is 2! But again the minimum Entropy that we could ever reach to is 0. Check it out:

In the next post, I will tell you how Information Gain uses Entropy to help the Decision tree decide on which attribute to split the dataset on. Spoiler alert: Which attribute will reduce the Entropy the most if we did the splitting on it!

Until then, take care 🙂

MLDawn