A Quick Recap

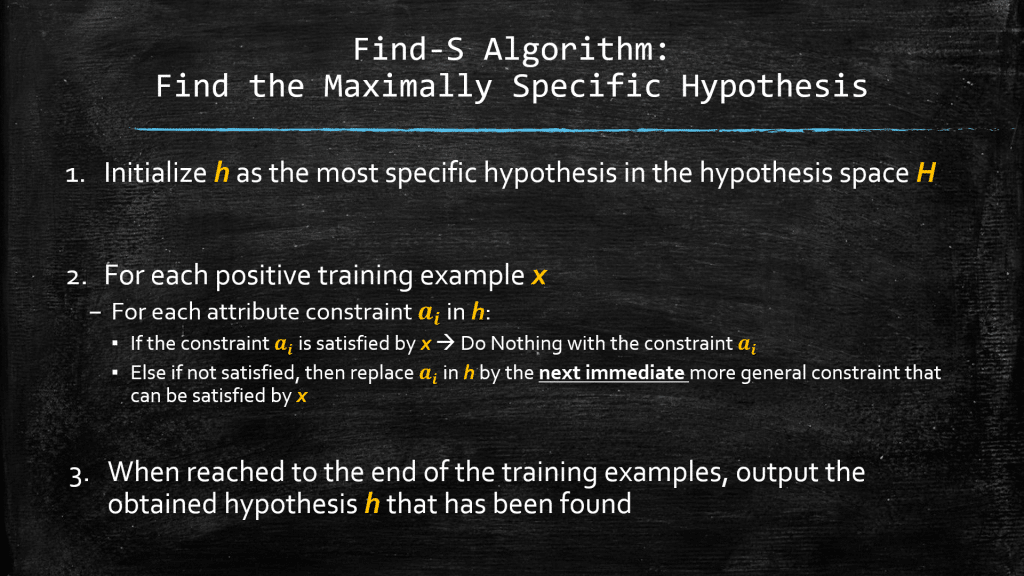

Hello everyone and welcome! In our previous post, we talked about the first algorithm that uses the “more-general-thank-or-equal-to” operation to smooth out the search in the hypothesis space: Find-S Algorithm. As a reminder, below you can see the steps in this algorithm:

Like all the other algorithms, and I mean it when I say “all of them”, this algorithm too has some issues that makes it not so perfect. In this short post, we will talk about these issues and draw your attention to the inherent drawbacks of the Find-S algorithm that you might have noticed as well by now! Enjoy!

Find-S is Not so Perfect!

Something that I have not shared with you yet is that our defined hypothesis space, H, that we have been working with so far, is only one example of the innumerous types of hypothesis spaces out there! Namely, we have defined H as a space replete with the hypotheses that are defined as conjunctions of constrained on the attributes of the training data, at hand!

In such hypothesis spaces, the Find-S algorithm is guaranteed to converge to the most specific hypothesis in H that is consistent with the positive examples (it will also be consistent with the negative examples as we have discussed previously!), if and only if:

- There is indeed a target hypothesis in H to be found. As we discussed, this target concept is guaranteed to be more-general-than-or-equal-to the hypothesis h that Find-S will eventually learn.

- There is no error (i.e., noise) in the data, specifically in the labels! This can throw off Find-S disastrously!

So, let’s now talk about the unanswered questions about this famous, and not so perfect, algorithm:

- How many hypotheses are out there in H that are consistent with the training examples? We must realize that even though Find-S finds a hypothesis consistent with the training examples, this is NOT the guarantee that it has converged to the target concept! Meaning that the hypothesis found by Find-S might not be the ONLY hypothesis in our hypothesis space H that is consistent with our training examples! So, some other consistent hypothesis might be a better solution but Find-S doesn’t bother with that! We would like our search algorithm to have a mechanism to characterize its uncertainty regarding the target concept (i.e., its true identity).

- In case the previous concern holds and there are indeed multiple hypotheses that are consistent with the training examples, the question is, whether the most specific hypothesis should be preferred over a more general one! Remember that when we find a hypothesis consistent with our training examples, we are hoping that it would generalize well to the unseen data in the future. If it is the most specific hypothesis, there is every chance that it has basically memorized the training examples and could not generalize well to the unseen data that it will encounter in the future. Remember that our training examples are just a small subset of the instance space, and we don’t have any idea about other possibilities that are out there in the instance space! So, we do what we can do! And it is find a hypothesis that is consistent with our training examples and still hopefully consistent with some other data that we might encounter in the future from the instance space. This is why, the most specific hypothesis (i.e., with respect to the training examples at hand), might not be a good idea: The danger of inability to generalize to the future unseen data. This is also called the problem of over-fitting the training data!

- Are the training examples error free? Here is the answer: in most cases no! We might have some noisy labels, meaning that the values of the target concept in our training examples, could simply be wrong! So, think about it! Find-S might try to generalize the current hypothesis just to make it satisfiable by a training example that has been mistakenly labeled as a positive example, whereas it is truly a negative example! Now, any negative examples that could happen in the future that are similar to these mistakenly labeled examples, will also satisfy the hypothesis. Basically, h will classify them as positive! This is not good!

- One important point in Find-S algorithm is that: It does NOT specify how to generalize! The way we have described the method for generalization in our example here, is that for every attribute we consider a Ø constraint, should that fail for any positive example in our training set, we generalize it by setting this constraint to the exact value that the attribute for the current training example has, and if for the next positive example, this constraint failed as well, we changed the constraint to the ultimate ? constraint that accepts literally everything. Good! However, one thing we did not mention to you is that in our example, where we want to learn the concept of play, the way we have described H (i.e., the hypothesis space), in in a way that we know for sure that there is only 1 maximally specific hypothesis h in H, that is consistent with the training examples, and we know that Find-S will find it for sure. Ready for the surprise?! Behold: “There are many different kinds of hypothesis spaces that one could define!”. As a result, in those hypothesis spaces, there could be several maximally specific hypotheses that are consistent with the training examples. We would like Find-S to take caution in the way it generalizes the constraints and makes use of the ≥g operation! This means that if Find-S were to always generalize the hypotheses based on the hypothesis language in 1 particular hypothesis space, it might simply fail to converge to the target concept! As a result, Find-S needs to be extended so that it could backtrack on its choices on how to generalize a hypothesis and consider the possibility that the true concept might be along a different branch of the ≥g operation than the branch it has selected to search! We will discuss such hypothesis spaces later.

Author: Mehran

Dr. Mehran H. Bazargani is a researcher and educator specialising in machine learning and computational neuroscience. He earned his Ph.D. from University College Dublin, where his research centered on semi-supervised anomaly detection through the application of One-Class Radial Basis Function (RBF) Networks. His academic foundation was laid with a Bachelor of Science degree in Information Technology, followed by a Master of Science in Computer Engineering from Eastern Mediterranean University, where he focused on molecular communication facilitated by relay nodes in nano wireless sensor networks. Dr. Bazargani’s research interests are situated at the intersection of artificial intelligence and neuroscience, with an emphasis on developing brain-inspired artificial neural networks grounded in the Free Energy Principle. His work aims to model human cognition, including perception, decision-making, and planning, by integrating advanced concepts such as predictive coding and active inference. As a NeuroInsight Marie Skłodowska-Curie Fellow, Dr. Bazargani is currently investigating the mechanisms underlying hallucinations, conceptualising them as instances of false inference about the environment. His research seeks to address this phenomenon in neuropsychiatric disorders by employing brain-inspired AI models, notably predictive coding (PC) networks, to simulate hallucinatory experiences in human perception.

Responses