What is an Algorithm?

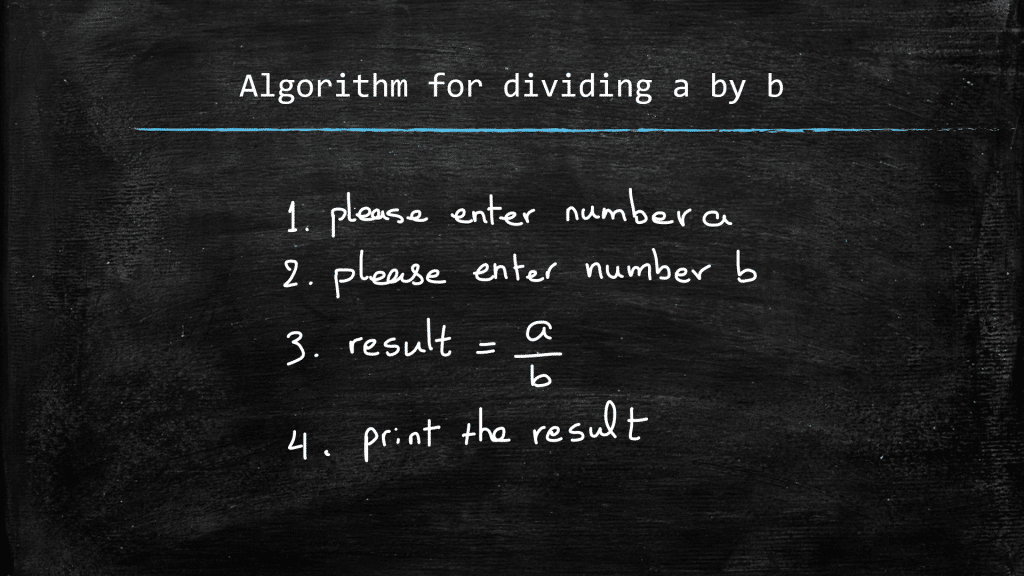

An algorithm describes how to do something, step by step, in the necessary order! As an observant reader might realize, this means that whoever designs an algorithm has to think of every situation that could come up. If something could fail, they should have anticipated it beforehand and added the necessary conditions and statements to the algorithm, so that the computer does not get stuck when a peculiar situation arises. Let’s consider an algorithm for dividing two numbers, a and b, entered by the user. What would the algorithm look like?

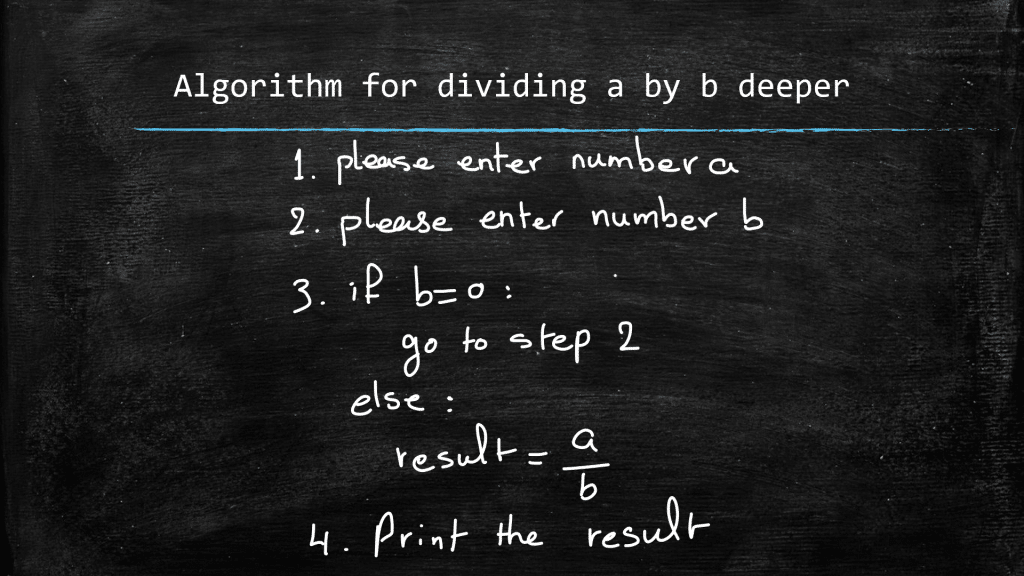

Now, have we thought of everything that could go wrong and cause the computer to throw an error and get stuck? What if the user enters 0 for b? We know that you cannot divide a number by 0. So, how should we modify our algorithm to handle this situation?

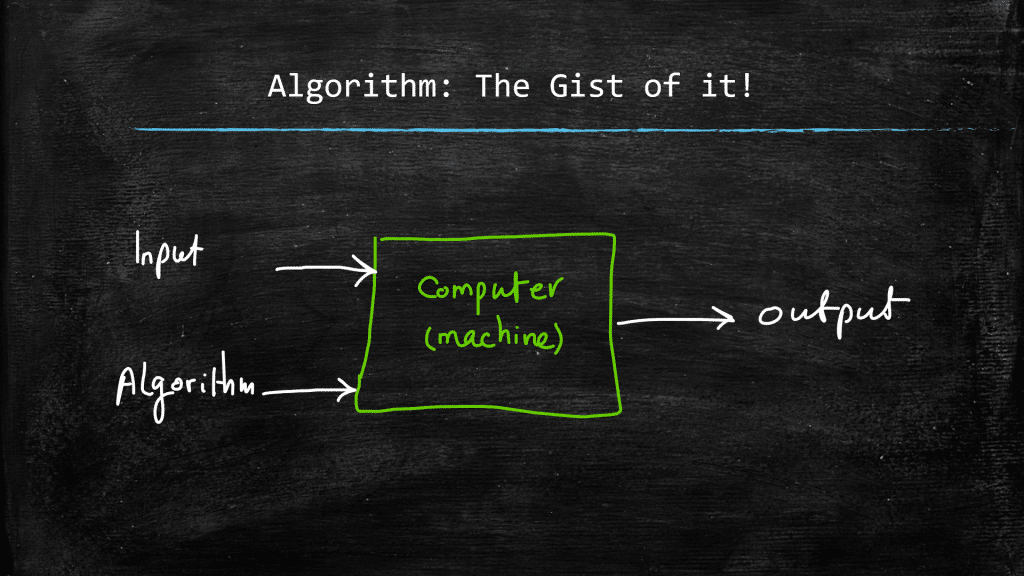
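To make this concrete, here is a minimal Python sketch of the modified division algorithm. It assumes the fix shown in the figure amounts to checking b before dividing and reporting an error instead of attempting the division; the function name `divide` and the error message are our own illustrative choices, not taken from the figure.

```python
from typing import Optional


def divide(a: float, b: float) -> Optional[float]:
    """Divide a by b, guarding against the one input that breaks the naive algorithm."""
    # Step 1: check for the special case before doing any arithmetic.
    if b == 0:
        # Division by zero is undefined, so report it instead of crashing.
        print("Error: cannot divide by zero. Please enter a non-zero value for b.")
        return None
    # Step 2: the normal path, which is the original (unguarded) algorithm.
    return a / b
```

For example, `divide(6, 3)` returns `2.0`, while `divide(1, 0)` prints the error message and returns `None` instead of crashing with Python’s `ZeroDivisionError`.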

Great! So we can conclude that an algorithm is a way of performing a certain task on the input provided to it, producing the output we are looking for. Since we are the authors of the algorithm, we know “the way” part exactly, with all its intricacies; we also have the inputs, and we are looking for the outputs. Of course, this is a ridiculously easy problem, and writing the algorithm for it is quite simple.

When does an algorithm FAIL?

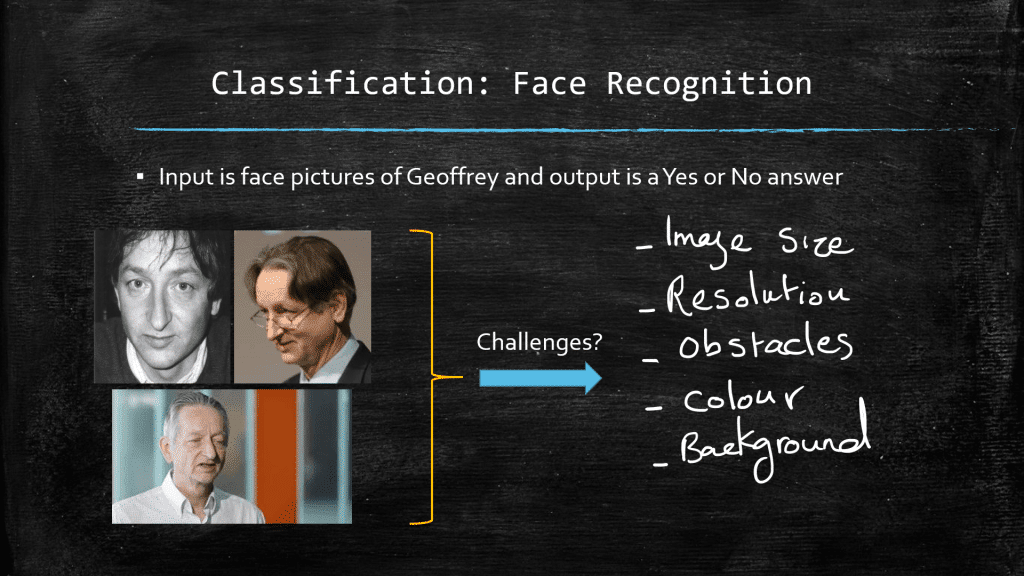

The short answer: when we don’t know all the possible situations that could happen! For instance, let’s say we want to make our machine capable of detecting pictures of Geoffrey Hinton. What would the input be? Well, pictures of Geoffrey! What do we hope to get as output? A simple answer: “Yes, it is Geoffrey” or “No, it is not”. But what is the algorithm for detecting Geoffrey Hinton in an arbitrary image?! There are practically infinitely many ways for Geoffrey to appear in a picture, so even if we somehow extracted the way (i.e., the algorithm) of detecting Geoffrey from 1000 images, that would not be nearly enough: our method would surely fail the day Geoffrey decides to take a very different picture!

What can be different in all these possible images? Lighting, angle, shade, occlusion, background, colors, …

So we cannot come up with an algorithm by hand that accounts for all of these variables; it is simply not possible! This is where machine learning can help us!

What is Machine Learning?

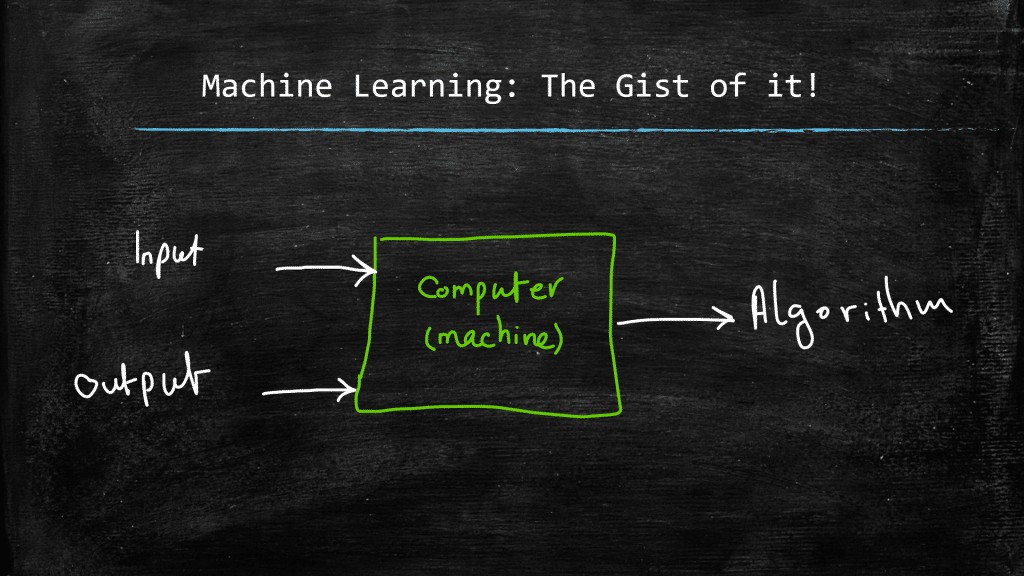

It is important to note that we (i.e., the machine learning community) believe there is a hidden pattern, function, or rule that maps certain inputs to their corresponding outputs. There is a reason why certain images are of Geoffrey! The pixels are NOT just randomly neighboring each other; there is a rule that, when applied, places image pixels next to each other in such a way that the result is Geoffrey!

In machine learning we know the inputs (e.g., images) and the outputs (i.e., the labels, such as “Geoffrey” or “not Geoffrey” in our example), but we do NOT know the algorithm that determines whether an input image shows Geoffrey. As a result, we show the machine the inputs together with the desired outputs (i.e., the ground truth) and ask it to extract the hidden algorithm from the abundance of data we have at hand. One might say we are trying to compensate for our ignorance about the algorithm by using lots and lots of data, and that is indeed correct! The figure above summarizes the gist of machine learning; compare it with the gist of a simple algorithm shown earlier in this post.
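As a toy illustration of that idea (far simpler than recognizing faces), the Python sketch below hides a rule, y = 2x + 1, inside a function that we pretend the learner cannot see. The learner only receives input/output pairs and recovers the rule with ordinary least squares, one of the simplest learning methods. The names `hidden_rule`, `learn_line`, and `predict` are hypothetical, and a real image classifier would learn a far more complex function from far more data.

```python
from typing import List, Tuple


def hidden_rule(x: float) -> float:
    """The rule the learner is NOT allowed to see; it only sees its outputs."""
    return 2.0 * x + 1.0


def learn_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Estimate slope and intercept from examples with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    # The fitted line passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Inputs and their ground-truth outputs: this is all the learner gets.
inputs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
outputs = [hidden_rule(x) for x in inputs]

slope, intercept = learn_line(inputs, outputs)
print(f"learned rule: y = {slope:.2f} * x + {intercept:.2f}")  # y = 2.00 * x + 1.00


def predict(x: float) -> float:
    """Apply the learned rule to an input the learner has never seen."""
    return slope * x + intercept
```

Unlike the division example, where we wrote the rule ourselves, here the rule is estimated from examples; with noisy real-world data, the recovered numbers would only approximate the true ones.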

A final remark for those of you who are already familiar with machine learning: we don’t always have access to ground truth, and this post is merely an attempt to convey the big picture of machine learning without focusing on the details.

Author: Mehran

Dr. Mehran H. Bazargani is a researcher and educator specialising in machine learning and computational neuroscience. He earned his Ph.D. from University College Dublin, where his research centered on semi-supervised anomaly detection through the application of One-Class Radial Basis Function (RBF) Networks. His academic foundation was laid with a Bachelor of Science degree in Information Technology, followed by a Master of Science in Computer Engineering from Eastern Mediterranean University, where he focused on molecular communication facilitated by relay nodes in nano wireless sensor networks. Dr. Bazargani’s research interests are situated at the intersection of artificial intelligence and neuroscience, with an emphasis on developing brain-inspired artificial neural networks grounded in the Free Energy Principle. His work aims to model human cognition, including perception, decision-making, and planning, by integrating advanced concepts such as predictive coding and active inference. As a NeuroInsight Marie Skłodowska-Curie Fellow, Dr. Bazargani is currently investigating the mechanisms underlying hallucinations, conceptualising them as instances of false inference about the environment. His research seeks to address this phenomenon in neuropsychiatric disorders by employing brain-inspired AI models, notably predictive coding (PC) networks, to simulate hallucinatory experiences in human perception.
