Dissecting ReLU: A deceptively simple activation function

What is this post about? This is what you will be able to generate and understand by the end of this post. This is the

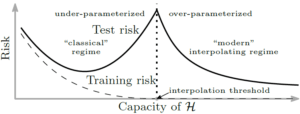
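The ReLU post is only teased here. As a minimal sketch (not the author's code), ReLU is f(x) = max(0, x), and a weighted sum of shifted ReLUs yields a piecewise-linear function whose kinks sit where each ReLU's input crosses zero. The names `relu`, `relu_grad`, `tiny_relu_net` and `hat` are hypothetical, chosen only for illustration.

```python
def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU. It is undefined at x == 0; we assume 0 there by convention."""
    return 1.0 if x > 0 else 0.0


def tiny_relu_net(x: float, weights, biases, out_weights) -> float:
    """Hypothetical one-hidden-layer net: sum_i a_i * relu(w_i * x + b_i).

    The output is piecewise linear in x, with a kink wherever w_i * x + b_i == 0.
    """
    return sum(a * relu(w * x + b) for w, b, a in zip(weights, biases, out_weights))


def hat(x: float) -> float:
    """A 'hat' bump built from three ReLUs: relu(x) - 2*relu(x - 1) + relu(x - 2).

    It is 0 for x <= 0, rises to 1 at x = 1, and falls back to 0 for x >= 2.
    """
    return tiny_relu_net(x, [1, 1, 1], [0, -1, -2], [1, -2, 1])


if __name__ == "__main__":
    for x in (-1.0, 0.5, 1.0, 1.5, 3.0):
        print(x, hat(x))
```

Three ReLUs are the smallest combination that produces a bounded bump; adding more shifted units lets the same construction trace more complex piecewise-linear shapes.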

We never truly understood the bias-variance trade-off! In this interview with Prof. Mikhail Belkin, we will discuss his amazing paper: “Reconciling modern machine learning…

What is this post about? An interview with the lead author of the paper, Prof. Mikhail Belkin. Together we will analyze an amazing paper entitled:

“There are a lot more papers written than there are widely read!” (Tom Mitchell) Prof. Tom Mitchell is one of the giants of machine learning

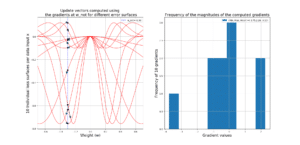

What will you learn? The video below delivers the main points of this blog post on Stochastic Gradient Descent (SGD) (GitHub code available here):

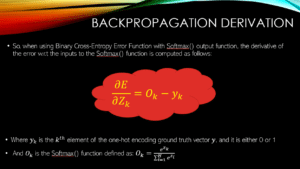
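The SGD post's code lives on GitHub and is not reproduced here. As a hedged sketch under stated assumptions (a one-feature linear model, squared-error loss, noiseless synthetic data), SGD updates the parameters from one example at a time, reshuffling the example order every epoch. The function name `sgd_linear_regression` and its defaults (learning rate 0.05, 200 epochs, seed 0) are illustrative assumptions, not taken from the post.

```python
import random


def sgd_linear_regression(xs, ys, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on the squared error.

    Each update uses the gradient of a single example's loss
    0.5 * (w*x + b - y)**2, visiting examples in a freshly shuffled order
    every epoch. Hyperparameter defaults are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # new sample order each epoch
        for i in indices:
            error = w * xs[i] + b - ys[i]  # prediction minus target
            w -= lr * error * xs[i]        # gradient of 0.5*error**2 w.r.t. w
            b -= lr * error                # gradient of 0.5*error**2 w.r.t. b
    return w, b


# Usage: noiseless data from y = 3x + 1 on x in [-1, 1].
xs = [i / 10 for i in range(-10, 11)]
ys = [3 * x + 1 for x in xs]
w, b = sgd_linear_regression(xs, ys)
print(round(w, 3), round(b, 3))  # prints 3.0 1.0
```

Because the synthetic data has no noise, every per-example loss is minimized at the same point, so plain SGD with a fixed learning rate converges to w = 3, b = 1; with noisy data one would typically decay the learning rate instead.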

What will you learn? This post is also available as a video, should you be interested 😉 (https://www.youtube.com/watch?v=znqbtL0fRA0&feature=youtu.be). In our previous post, we